
GenAI Music: Napster 2.0 or a New Note in Music History? The Music Industry Faces Yet Another Challenge from New Technology. How Will It Adapt?

Abstract

Like many other industries, the music industry is being rapidly disrupted by the adoption of generative AI (GenAI). Services like Endel use AI to create personalized, adaptive soundtracks for different moods and activities[1]. Paul McCartney even used AI to isolate John Lennon's vocals from an old demo recording in order to complete a new Beatles track[2]. However, the music industry is an especially contested battleground for GenAI disruption: a tussle between human creativity and automation. In this paper, we examine the challenges facing the music industry's stakeholder ecosystem and offer a prediction of how the industry will evolve in the near future (6 to 18 months).

Unique Challenges With GenAI Music

The interplay between artistic value and a complex economic ecosystem makes the music industry a fascinating case study for how GenAI will reshape creative fields. There have traditionally been four stakeholders:

Artists: They typically own the copyright to the composition (the underlying music and lyrics), but often sign deals with record labels that grant the label ownership of the master recording (the specific recording of the song). This means the label controls how the song is distributed, marketed, and monetized. In return, the artist receives royalties from sales and streams.

Record Labels: These companies own the master recordings and invest heavily in artist development, production, marketing, and distribution. They profit by selling music (physical and digital), licensing it for use in movies, commercials, etc., and collecting streaming royalties.

Distributors: These companies act as middlemen, getting the music from labels to retailers (physical and digital stores) and streaming platforms. They often take a cut of the sales or streams for their services.

End Customers: The ultimate consumers of music products and services. They purchase albums, download tracks, stream music, and attend concerts, and in doing so they drive the demand for music content. Their trends and preferences shape the direction of the music industry.

Music Tech Players (new entrant): The music tech landscape is evolving even faster than the music itself, with new tools and techniques emerging regularly. Users can now create music from text prompts, or transfer the style of existing music to new compositions, creating unique and interesting sounds. As Ted Gioia predicts, the next revolution in music may be funded by venture capitalists[3]. Notable companies advancing the technology include:

Suno: Suno is a music generation tool that can create full songs, including vocals and instrumentals, from text prompts. It offers a mobile app (at the time of writing, available only on iOS in the US) with tools for music creation, sharing, and learning. Suno caters to anyone interested in making music, from beginners to experienced creators. In July 2024, Suno reported that more than 12 million people had used the platform[4].

Udio: Like Suno, Udio generates full songs. Customers provide a text prompt describing the music they want, and Udio generates the audio, including vocals and instruments. Udio, whose investors include will.i.am and UnitedMasters, attracted significant attention: by May 2024, it reported that more than 600,000 people had tested the platform in its first two weeks of public availability[5].

MusicFX by Google: In Google's AI Test Kitchen, users can create music by entering descriptive prompts, producing personalized compositions in specific genres or moods. Users can generate tracks up to 70 seconds long as well as music loops, explore prompts through expressive clips, and download or share their creations with friends. Since launch, people have used the tool to create more than 10 million tracks[6].

These technological advances have disrupted the ecosystem and ushered in a new era for the music industry, one marked by both immense potential and significant challenges. The entry of this new stakeholder, “Music Tech,” has sparked several debates:

1. Art vs. Algorithm: Music is a deeply creative field that thrives on human expression and originality. While GenAI can generate impressive compositions, it raises questions about the soul and authenticity of music created by algorithms. Will audiences embrace AI-made music, or will they crave the human touch?

2. Assisted vs. Generated: Music production tools have long helped musicians work more efficiently. From electronic equipment to mixing tools to audio editing, making music has progressively gotten easier. With AI music generation, however, the line between assistance and generation is blurry and subjective, because the input required to generate a track is trivial, because the model producing it was trained on countless hours of human creativity, or both. If a human takes an AI-generated track and modifies it significantly, is the result original? What if it is the other way around? Who owns the copyright?

3. Quality vs. Quantity: Like earlier breakthroughs such as the internet and the mobile phone, AI music tools will open up music creation to a much wider audience. We will hear music from budding artists who were held back by a lack of resources or by an inability to express their creativity through traditional means. We should also expect a torrent of low-quality music that arguably adds little to the world's creative output and may break existing paradigms of music discovery and consumption.

4. Entrenched Value Chain: The music industry has a long-established structure involving artists, labels, and distributors. GenAI tools could disintermediate this system, empowering artists directly while potentially disrupting the income streams of established players.

Two Sides Of The Argument

While these advancements are exciting, artists and record labels are increasingly concerned. On June 24, 2024, the Recording Industry Association of America (RIAA) announced lawsuits against Suno AI and Uncharted Labs (the developer of Udio). The plaintiff labels, including Sony, Universal, and Warner Music, allege that these startups trained their music-generation AI on vast amounts of copyrighted recordings without permission[7]. “AI companies, like all other enterprises, must abide by the laws that protect human creativity and ingenuity,” the complaints against Suno and Udio state. “There is nothing that exempts AI technology from copyright law or that excuses AI companies from playing by the rules.” That assertion is likely to be the key point of contention in the lawsuits: at the time of writing, US courts had not yet ruled on whether using copyrighted materials to train AI amounts to copyright infringement[8].

Before we turn to Suno's and Udio's responses, let's explore the legal framework of copyright infringement. Music copyright infringement occurs when someone uses part of a song, or an entire song, without permission from the copyright owner. This can apply to the melody, rhythm, lyrics, or a combination of these elements. Determining whether two pieces of music are too similar, however, requires a nuanced understanding of what originality the law protects. Below are two notable US music copyright disputes:

In March 2015, a jury in the U.S. District Court for the Central District of California found that Robin Thicke and Pharrell Williams's song “Blurred Lines” infringed Marvin Gaye's “Got to Give It Up,” which was written more than thirty years earlier. The jury based its finding primarily on the grounds that the two songs were “substantially similar”[9].

Around the same time, Sam Smith reached a settlement with Tom Petty in which Smith agreed to give Petty songwriting credit and pay him royalties for Smith's 2014 song “Stay With Me,” which was allegedly substantially similar to Petty's 1989 hit “I Won't Back Down.” The two songs did not share lyrics, key, tempo, or rhythm; the only similarity between them was the progression[10].

Common themes and arguments across these lawsuits are:

Originality vs. Influence: Music builds on itself, and it is common for artists to be inspired by others. Copyright law protects the unique expression of ideas, but not the ideas themselves. A similar chord progression or rhythmic pattern might therefore not be infringement, especially if it is used in a different context.

Substantial Similarity: Courts look for "substantial similarity" between the copyrighted work and the allegedly infringing one. This considers the overall impression of the music, not just individual notes or elements. It is a judgment call that can be subjective.

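Fair Use: The doctrine of "fair use" allows limited use of copyrighted material without permission for purposes such as criticism, commentary, parody, or news reporting. Whether a particular use is fair depends on the specific situation: US courts weigh four statutory factors, namely the purpose and character of the use, the nature of the copyrighted work, the amount and substantiality of the portion used, and the effect of the use on the market for the original.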

In their responses to the lawsuits, Suno and Udio claim that their music is original: they use AI to analyze and understand different musical styles, but do not directly copy any specific songs. They also argue that their use of the material falls within “fair use” exemptions, and they accuse the record companies of filing the lawsuits to prevent competition. In their view, anyone can use elements of different genres (like jazz or pop) to create new music, and copyright protects specific recordings of songs, not musical styles or the underlying genres themselves[11]. Suno compares its models to “the kid writing their own rock songs after listening to the genre” or “a teacher or a journalist reviewing existing materials to draw new insights,” concluding that “learning is not infringing. It never has been, and it is not now.”[12]

Our Perspective: GenAI + Music—It's Only A Matter Of Time

With every new innovation, the status quo is disrupted, and established mechanisms and new ideas go back and forth until they reach a healthy equilibrium that fosters the next wave of growth and innovation. This has played out across industries: the automobile disrupted the horse-drawn carriage industry, television disrupted radio, the internet disrupted traditional print media, and, more recently, ride-sharing services like Uber and Lyft have challenged traditional taxi and public transportation systems. We believe the same will be true of GenAI's interplay with the music ecosystem.

The music industry is no stranger to technological disruption. Sampling and Napster were moments when technology enabled new ways of interacting with music, often in defiance of established legal norms, before new legal frameworks and cultural shifts eventually brought them into the mainstream. Sampling, and the on-demand digital access that Napster pioneered, are now accepted and integral parts of the music experience: the vast majority of music consumption takes place on the major streaming platforms[13], and a large percentage of hits sample previously released songs[14]. Sampling is a strong counter to the bear case that AI will lead to uncreative, soulless music, while the path from Napster to streaming shows how a sustainable legal ecosystem can emerge from something that was viewed as undercutting artists' revenue streams and was, of course, entirely illegal. Unlike in the Napster days, the music industry in general, and labels in particular, appear excited about AI and are striving to get ahead by finding ways to work with it instead of fighting it, which gives GenAI an additional tailwind.

The authors believe the music ecosystem will evolve to an equilibrium where GenAI is accepted, enhancing creativity, promoting access, lowering costs, and allowing human creators to engage audiences while supporting their passion and livelihood.

How This Shift Will Take Place

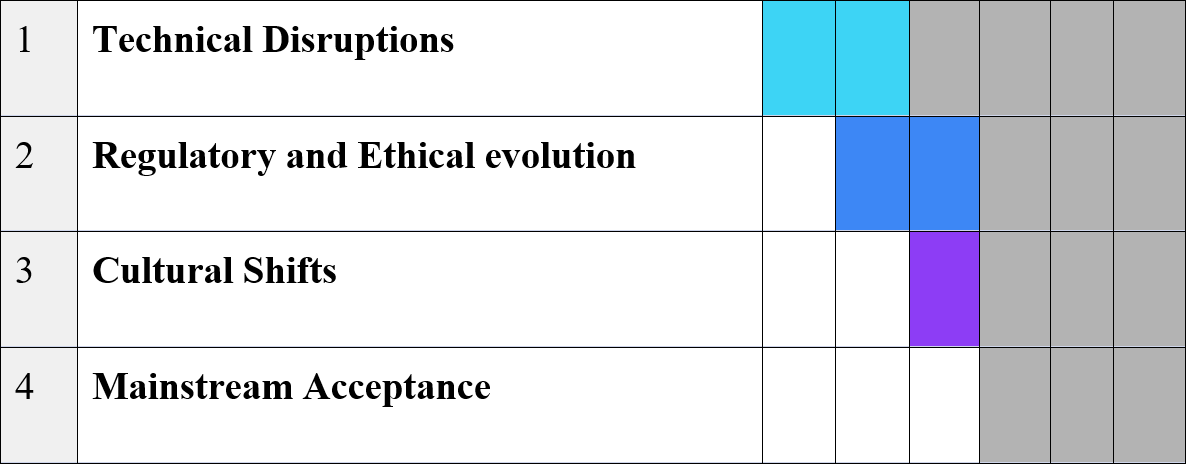

Like streaming and sampling did, GenAI adoption will likely undergo a complex evolution, marked by technological advancements, evolving regulations, ethical considerations, cultural shifts, and eventual mainstream acceptance. This process, though often sequential, can be messy, overlapping, and take several years. Currently, we are well and truly in the technical disruption phase (still closer to the start than the finish) and entering the regulatory and ethical evolution phase.

Fig 1.0 - Table showing overlapping phases of adoption of new technology

In the next 6 to 18 months, we anticipate the following trends, which will drive a cultural shift and, eventually, mainstream acceptance of this new technology:

1. Social acceptance: We will begin to see social acceptance among artists and end customers. AI-assisted music generation will allow artists to create music faster, iterate more efficiently, and explore creative possibilities that were previously difficult or time-consuming. Instead of replacing human creativity, AI will become a tool that enhances it: generating instrumental layers, suggesting melodic variations, or even analyzing listener data to tailor songs to specific audiences. Most artists will also use AI productivity tools to speed up songwriting and recording. Listeners are already embracing AI-generated songs. In April 2023, “Heart on My Sleeve,” a track featuring AI-generated vocals imitating Drake and The Weeknd, went viral[15]. Some user-created tracks on Suno have reached up to 900K streams, indicating substantial audience engagement with AI-generated music.

2. Increased utility: GenAI will democratize music creation, enabling upcoming artists with limited access to expensive production tools or formal training to create professional-quality music. This will allow for more voices and diversity in the music industry, particularly among artists from underrepresented backgrounds or those with disabilities who may not have had access to traditional musical education or equipment. For more established artists, GenAI will be a huge productivity booster, enabling them to create and experiment at unprecedented scale and speed.

3. Establishment of ethics: Music tech companies will move toward building ethical AI models, driven by a combination of market forces, regulation, and altruism. In practice, this means training models on music that is explicitly in the public domain or covered by royalty-free licenses, so that the underlying training material is freely usable. We also expect advances in techniques for filtering copyrighted material out of training datasets. In addition, legal experts and musicians will have a larger say in establishing guidelines that ensure the fair use of AI technologies and protect the rights of creators.

4. New economic models: Tech companies will likely develop tools to trace the origins of training data, allowing artists to receive proper credit and compensation for their work. The same provenance tracking could support a tiered market: just as handmade items are considered artisanal and valued more highly than manufactured ones, we may see tiered music offerings, with customers willing to pay a premium for music created by humans without AI assistance.

5. Litigation to collaboration: We believe this push from end customers, artists, and new economic models will force record labels and publishers to rethink their business models. They will seek to become innovators by building proprietary AI music systems that both generate new revenue streams and protect their catalogs, using AI to enable a higher volume of releases while maintaining quality.

With this cultural shift, all stakeholders in the ecosystem will begin to expect more from their AI experiences, a reflection of mainstream acceptance.

End customers will also expect more from live performances. This will make live performances more valuable, and artists will increasingly invest in creating unique, immersive live experiences that cannot be replicated by AI. Fans will be drawn to these events as a way to experience authentic human artistry. Artists may also use AI to enhance live performances with dynamic visuals or real-time musical adjustments, creating more interactive shows that heighten the emotional impact of the event and, in turn, customer satisfaction.

Record labels will capitalize on this shift by offering AI-generated music services, tapping into new revenue streams and reducing overheads for smaller projects. With AI exponentially increasing the amount of content available, traditional streaming platforms will see their catalogs grow by orders of magnitude. They will turn to superfan engagement for revenue, growth, and brand visibility[16], and they will use GenAI for deeper personalization, enabling users to explore niche subcultures and uncover hidden gems that match their unique tastes, enhancing the diversity and richness of the music ecosystem.

Conclusion

The long-term prospects of GenAI music are uncertain, as is its potential to spark a Renaissance-level creative explosion. However, its impact on the music industry is undeniable. While authenticity and human connection may continue to drive consumer preferences, GenAI's ability to evolve and potentially replicate these qualities remains an intriguing possibility. Ultimately, customers decide what music they want to listen to. In a marketplace with a burgeoning selection of both AI-generated and human-made music, listeners will vote with their time, attention, and money. By supporting certain types of content, listeners will guide which styles, genres, and experiences flourish. This dynamic consumer choice will push the industry to cater more closely to user preferences, effectively giving consumers greater influence over what rises to prominence and creating a new equilibrium.

Copyright Notice

Copyright ©2024 by Siddharth Kashiramka

This article was published in the Journal of Business and Artificial Intelligence under the "gold" open access model, where authors retain the copyright of their articles. The author grants us a license to publish the article under a Creative Commons (CC) license, which allows the work to be freely accessed, shared, and used under certain conditions. This model encourages wider dissemination and use of the work while allowing the author to maintain control over their intellectual property.

About the Author

Siddharth (Sid) Kashiramka

Sid is a Product Leader at Amazon Artificial General Intelligence (AGI), building next-generation large language models (LLMs). Before joining Amazon, Sid led AI/ML teams at Capital One and advised several Fortune 50 clients as a digital strategy consultant at PwC. He serves as a strategic mentor to multiple startups coming out of the Lighthouse Labs. Sid holds a bachelor's degree in computer science engineering from Devi Ahilya University, India, and a Master of Business Administration from Emory University, Atlanta.

About the Journal

The Journal of Business and Artificial Intelligence (ISSN: 2995-5971) is the leading publication at the nexus of artificial intelligence (AI) and business practices. Our primary goal is to serve as a premier forum for the dissemination of practical, case-study-based insights into how AI can be effectively applied to various business problems. The journal focuses on a wide array of topics, including product development, market research, discovery, sales & marketing, compliance, and manufacturing & supply chain. By providing in-depth analyses and showcasing innovative applications of AI, we seek to guide businesses in harnessing AI's potential to optimize their operations and strategies.

In addition to these areas, the journal places a significant emphasis on how AI can aid in scaling organizations, enhancing revenue growth, financial forecasting, and all facets of sales, sales operations, and business operations. We cater to a diverse readership that ranges from AI professionals and business executives to academic researchers and policymakers. By presenting well-researched case studies and empirical data, The Journal of Business and Artificial Intelligence is an invaluable resource that not only informs but also inspires new, transformative approaches in the rapidly evolving landscape of business and technology. Our overarching aim is to bridge the gap between theoretical AI advancements and their practical, profitable applications in the business world.